Rust LLM Kubernetes

Description

This project contains a Dockerized sentiment analysis application that serves an open-source language model from Hugging Face through a Rust Actix Web service and runs on Kubernetes.

The application uses an open-source model from Hugging Face, distilbert/distilbert-base-uncased-finetuned-sst-2-english. Users send a JSON payload containing the text they want analyzed. The model returns the sentiment as a label, either NEGATIVE or POSITIVE, together with a confidence score between 0 and 1. The end user can therefore see the sentiment of the input text and drive further analysis from the confidence score by setting a threshold that suits their use case.
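The thresholding step described above can be sketched in a few lines of Rust. This is a minimal illustration and not code from this repository: the `Label` and `Decision` types, the `parse_label` and `decide` functions, and the 0.9 threshold used in the usage note are all hypothetical names and values chosen for the example.

```rust
/// Sentiment label returned by the model ("POSITIVE" or "NEGATIVE").
#[derive(Debug, Clone, Copy, PartialEq)]
enum Label {
    Positive,
    Negative,
}

/// Outcome after applying a caller-chosen confidence threshold.
/// Hypothetical type, introduced only for this sketch.
#[derive(Debug, PartialEq)]
enum Decision {
    /// The score reached the threshold, so the label is trusted.
    Accept(Label),
    /// The score fell below the threshold, so the text needs a closer look.
    NeedsReview,
}

/// Convert the label string from the response into a `Label`.
/// Returns `None` for any value other than the two documented labels.
fn parse_label(raw: &str) -> Option<Label> {
    match raw {
        "POSITIVE" => Some(Label::Positive),
        "NEGATIVE" => Some(Label::Negative),
        _ => None,
    }
}

/// Accept the label only when the confidence score reaches the threshold.
fn decide(label: Label, score: f64, threshold: f64) -> Decision {
    if score >= threshold {
        Decision::Accept(label)
    } else {
        Decision::NeedsReview
    }
}
```

With a threshold of 0.9, for example, a POSITIVE result scored 0.99 would be accepted, while a POSITIVE result scored 0.83 would be flagged for review. The right threshold depends on how costly a wrong label is in a given use case.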

Application Flowchart

Demo

Please click on the image below to view the demo video.

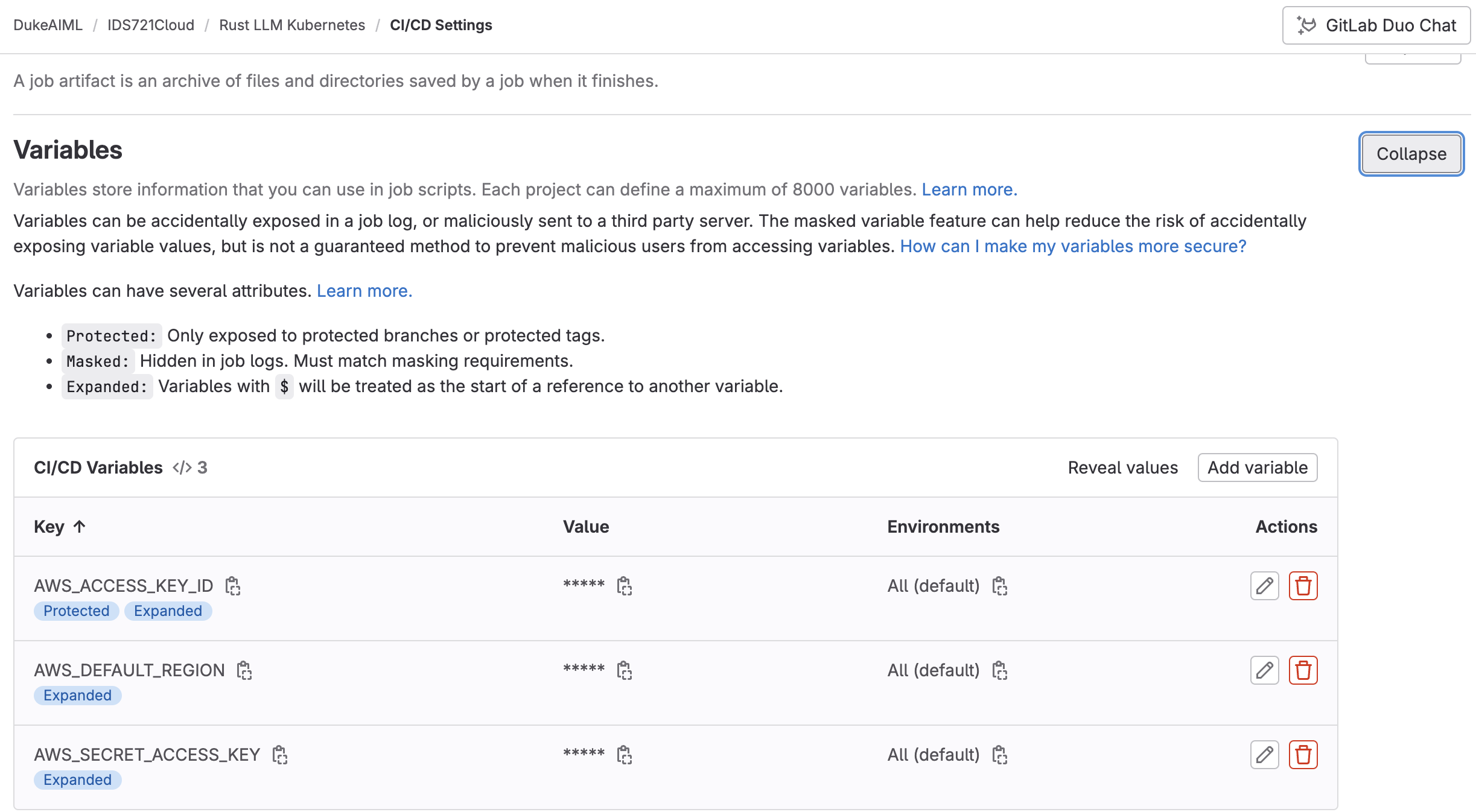

CI/CD Pipeline on GitLab to AWS

We use GitLab CI/CD to build the Docker image, push it to AWS ECR, and deploy the application to AWS EKS. With the pipeline and its CI/CD variables configured as shown below, the deployment process is fully automated.

AWS API Endpoint

curl -X POST -H "Content-Type: application/json" -d '{"text": "Wow, we love AWS Cloud Services"}' http://a5555caf8cc7242889bff784915d1bd9-1162981651.us-east-1.elb.amazonaws.com:8080/sentiment

Azure API Endpoint

curl -X POST -H "Content-Type: application/json" -d '{"text": "I do think MIDS is great, but what do you think? Huh?"}' http://llmrustazure-dns-ihr99kcw.hcp.eastus.azmk8s.io:8080/sentiment

Running Locally

Once you have started the application with cargo run, you can access it at http://localhost:8080.

Using the following command, you can send a POST request to the application with a JSON payload containing the text you want to analyze.

curl -X POST -H "Content-Type: application/json" -d '{"text": "I love rust, how about you?"}' http://localhost:8080/sentiment

Then you should see the following response, as shown in the screenshot below:

{"Sentiment Analysis":[{"label":"POSITIVE","score":0.9936991930007936}]}

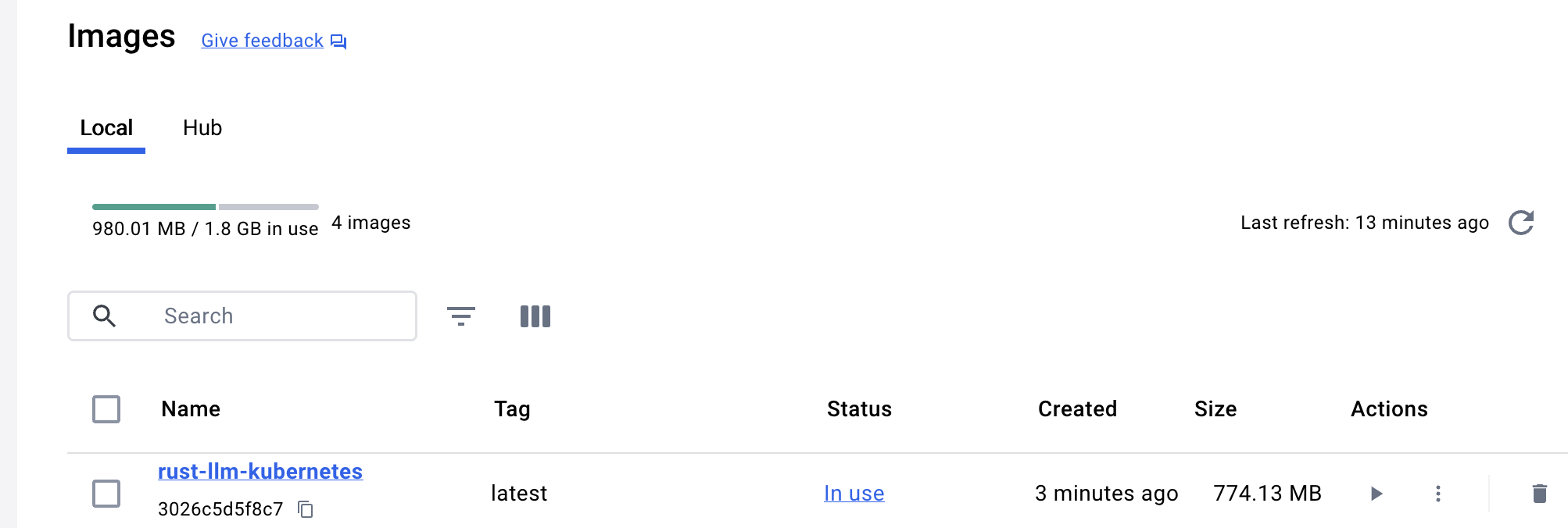

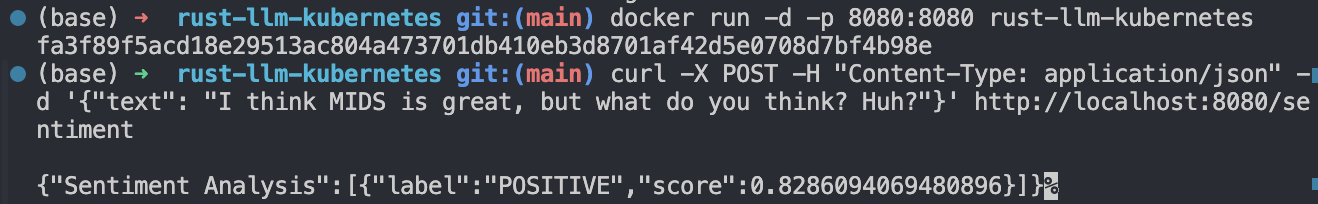
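When the text to analyze comes from user input rather than a hand-typed curl command, it has to be escaped before it is placed inside the JSON payload. The following is a minimal, standard-library-only sketch of that step. The function names `escape_json` and `sentiment_payload` are hypothetical; only the `text` field name is taken from the curl example above. A real client would more likely rely on a JSON library.

```rust
/// Escape a string so it can be embedded inside a JSON string literal.
/// Handles quotes, backslashes, and control characters.
fn escape_json(text: &str) -> String {
    let mut out = String::with_capacity(text.len());
    for c in text.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \u00XX form.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Build the request body expected by the /sentiment endpoint:
/// a JSON object with a single "text" field.
fn sentiment_payload(text: &str) -> String {
    format!("{{\"text\": \"{}\"}}", escape_json(text))
}
```

Calling `sentiment_payload("I love rust, how about you?")` produces the same body that is passed to curl with `-d` above.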

Running on Docker Container

You can also run the application in a Docker container.

First, build the Docker image using the following command:

docker build -t rust-llm-kubernetes .

Then run the Docker container using the following command:

docker run -d -p 8080:8080 rust-llm-kubernetes

Once the container is running, you can access the application at http://localhost:8080 and send a POST request with a JSON payload containing the text you want to analyze:

curl -X POST -H "Content-Type: application/json" -d '{"text": "I think MIDS is great, but what do you think? Huh?"}' http://localhost:8080/sentiment

Then you should see the following response, as shown in the screenshot below:

{"Sentiment Analysis":[{"label":"POSITIVE","score":0.8286094069480896}]}

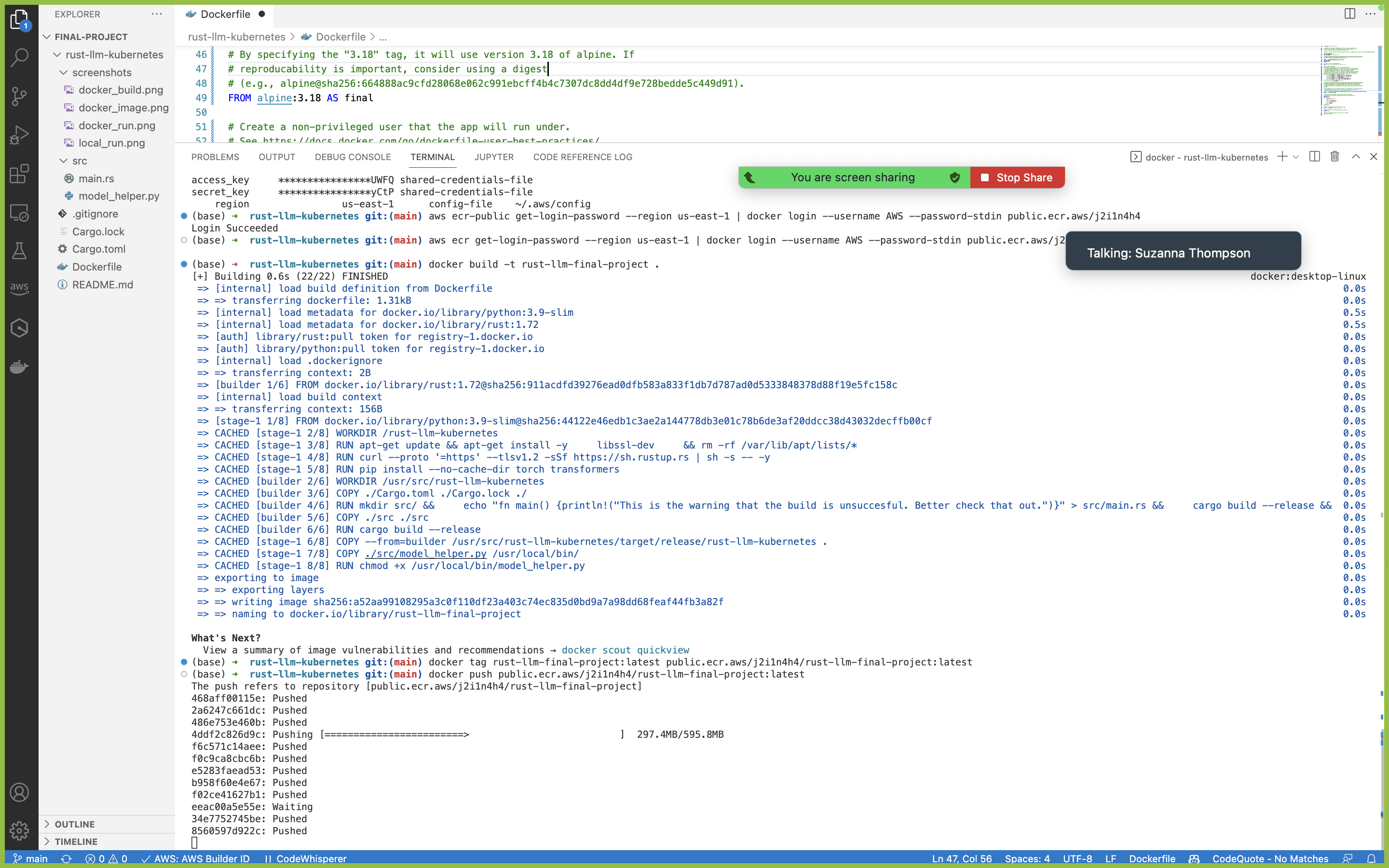

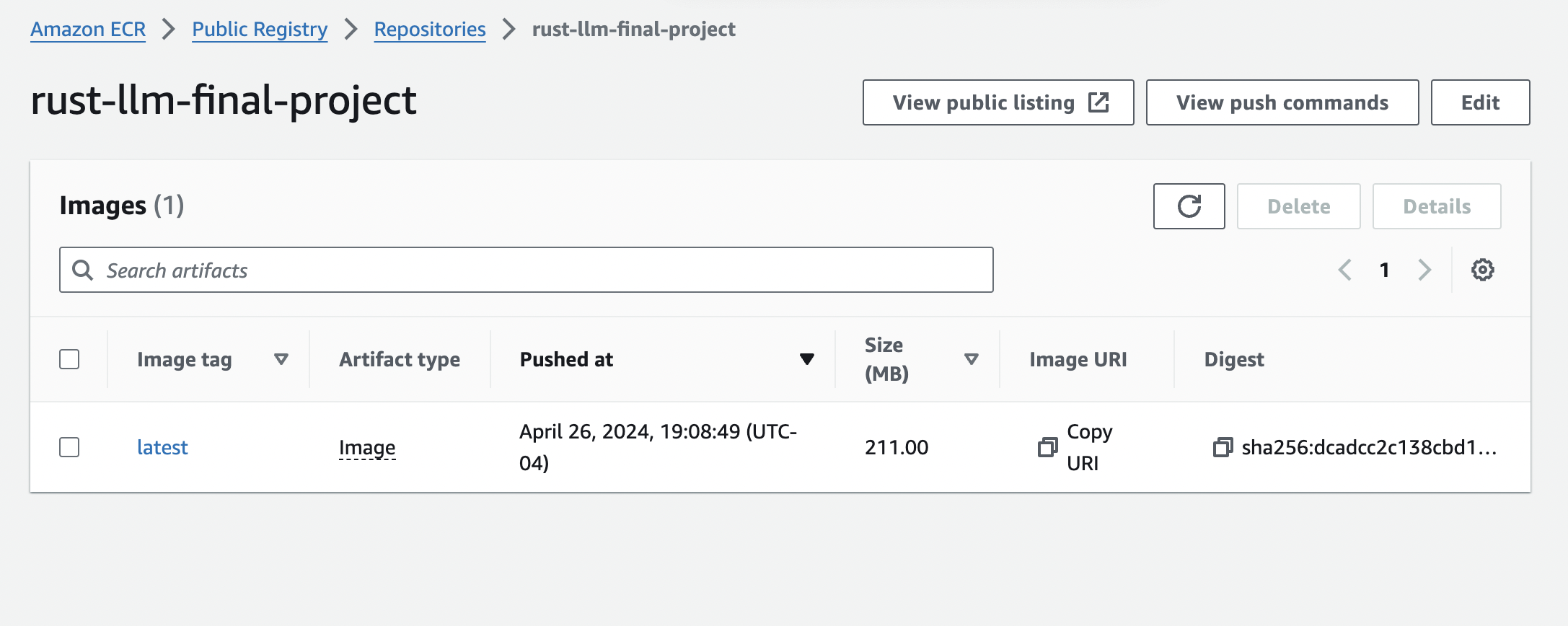
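The same POST request can also be issued from Rust instead of curl. The sketch below uses only the standard library and writes the HTTP/1.1 request by hand, which keeps the example dependency-free. It is an illustration under stated assumptions, not part of this project: `build_post` and `post_sentiment` are hypothetical helper names, and the returned string is the raw response (status line, headers, and body) without any parsing.

```rust
use std::io::{Read, Write};
use std::net::TcpStream;

/// Build a minimal HTTP/1.1 POST request carrying a JSON body.
/// `Connection: close` asks the server to close the socket after replying,
/// so the client can simply read until end of stream.
fn build_post(host: &str, path: &str, body: &str) -> String {
    format!(
        "POST {path} HTTP/1.1\r\nHost: {host}\r\nContent-Type: application/json\r\nContent-Length: {len}\r\nConnection: close\r\n\r\n{body}",
        path = path,
        host = host,
        len = body.len(),
        body = body
    )
}

/// Send `body` to the /sentiment endpoint at `addr` (for example
/// "localhost:8080") and return the raw, unparsed HTTP response.
fn post_sentiment(addr: &str, body: &str) -> std::io::Result<String> {
    let mut stream = TcpStream::connect(addr)?;
    stream.write_all(build_post(addr, "/sentiment", body).as_bytes())?;
    let mut response = String::new();
    stream.read_to_string(&mut response)?;
    Ok(response)
}
```

With the container from the previous step running, `post_sentiment("localhost:8080", "{\"text\": \"I think MIDS is great\"}")` should return a response whose body has the same shape as the JSON shown above. For anything beyond a quick check, an HTTP client crate is the more robust choice.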

Deploying on AWS ECR

The Docker image for this project was pushed to a public repository on AWS Elastic Container Registry (ECR), which makes the image publicly available.

Below is an image of the public repository.

Below is an image of the AWS Elastic Kubernetes Service (EKS) cluster and its running pods.

After creating the Kubernetes clusters, we needed to grant the appropriate permission policies, in particular eks:DescribeCluster, which we enabled through an inline JSON policy (titled describe_cluster_policy in the image below).

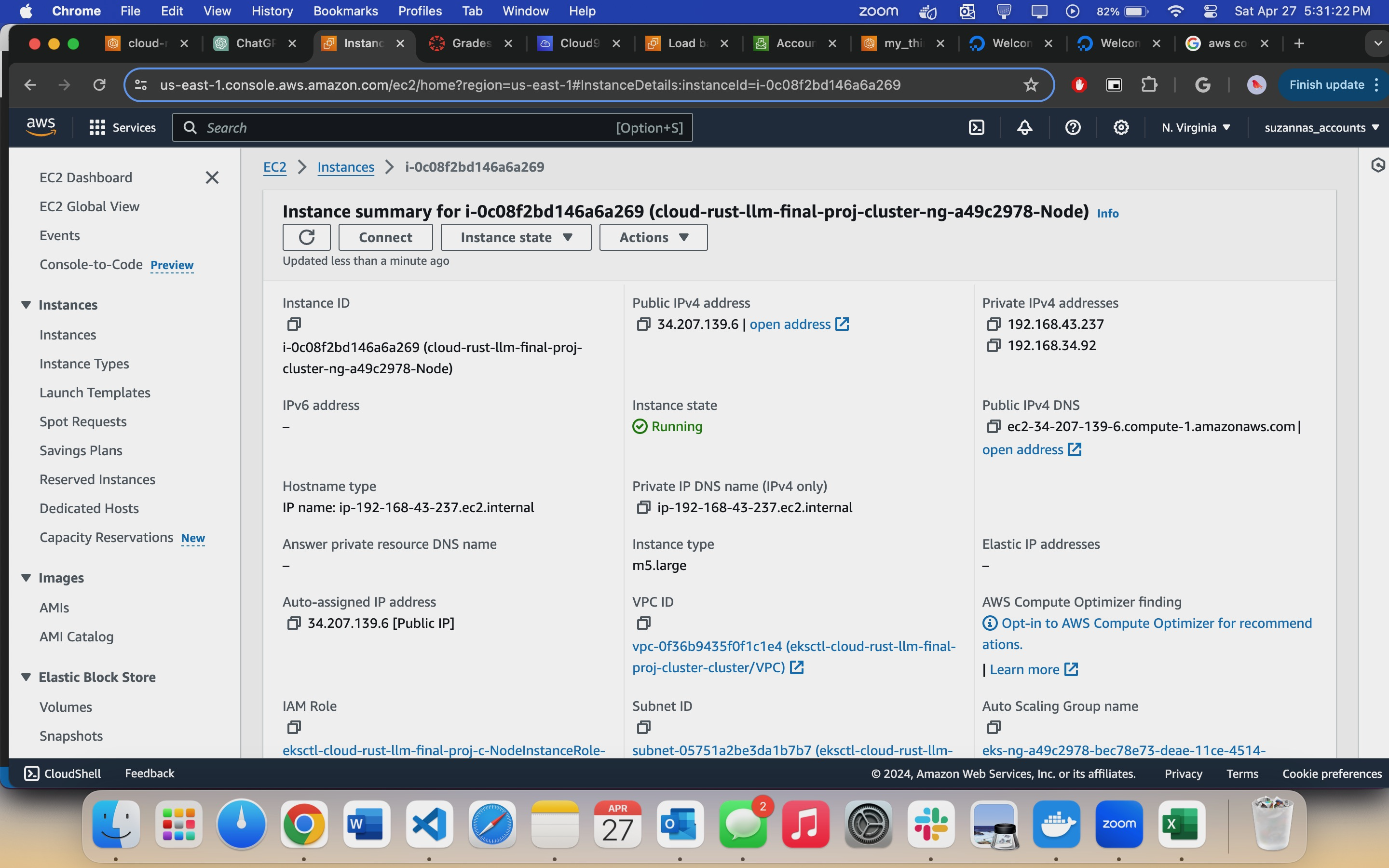

Below is an image of the AWS EC2 instances we created for this project as computing resources.

To ensure our computing resources were utilized efficiently, we also set up a load balancer.

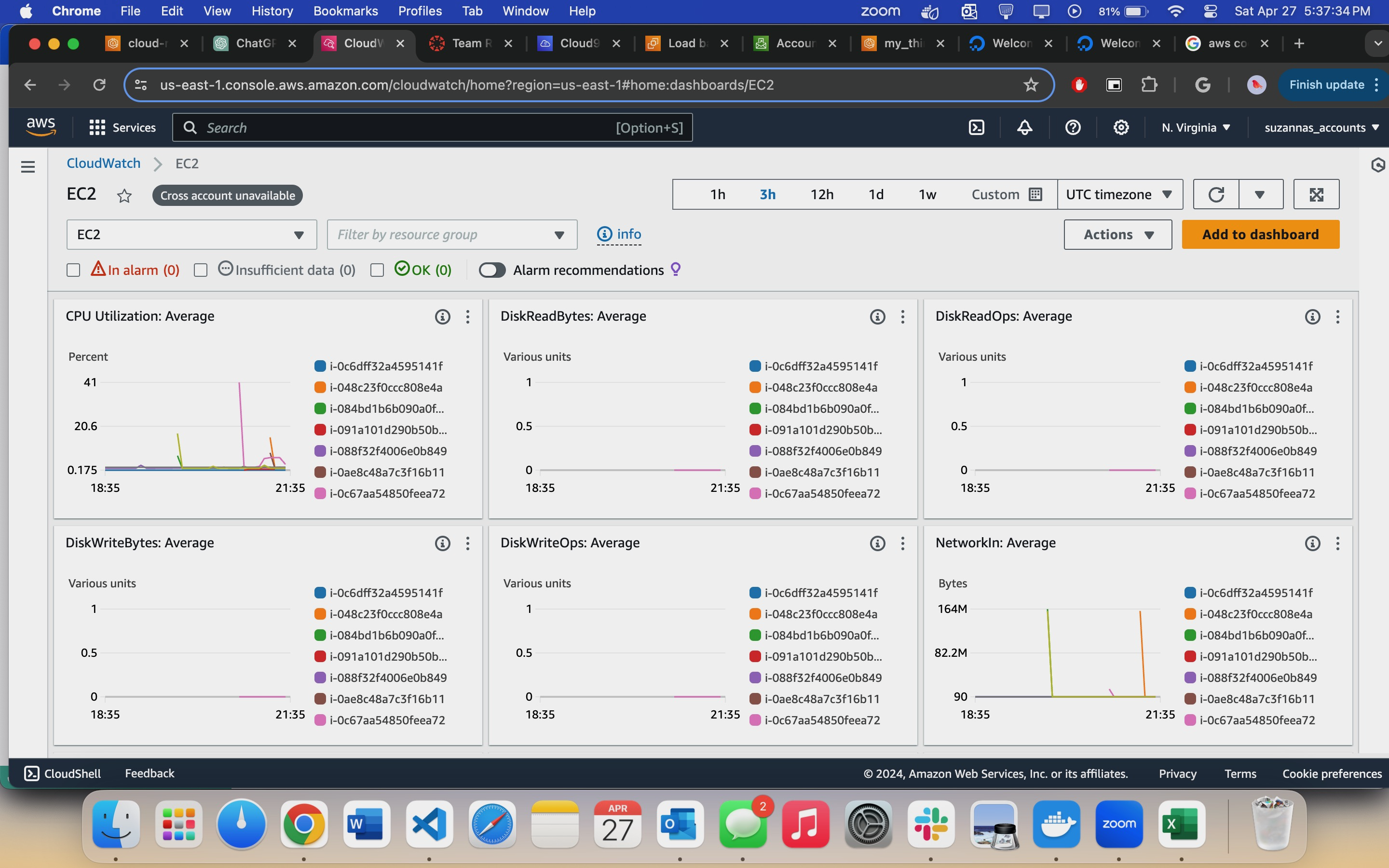

To monitor the application, we set up CloudWatch alarms.

Deployed to Azure

The Docker image was also pushed to a public repository on Azure Container Registry.

Below is an image of the repository.

While the application is running on Azure Kubernetes Service, you can view its logs as shown below.

Azure Logging